A Tesla patent published last year offers valuable insight into how Tesla’s Full Self-Driving (FSD) technology works. SETI Park, a patent analyst, also highlighted the patent on X.

The patent describes the core technology behind Tesla’s FSD and explains how the system processes and interprets the data it collects.

To keep things simple, we break the patent down into sections and explain how each one contributes to FSD’s functionality.

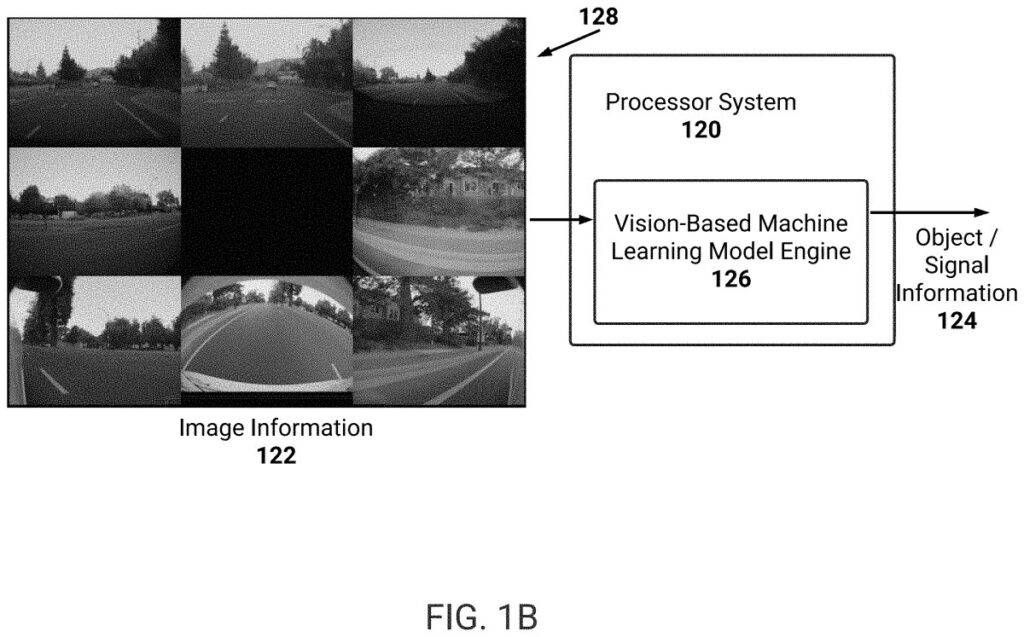

Vision-Based System

The patent outlines a vision-only system, in line with Tesla’s goal of giving vehicles the ability to perceive, understand, and interact with their surroundings. The system uses multiple cameras, some with overlapping fields of view, to provide 360-degree coverage around the vehicle, a view no human driver can match.

Of particular interest is how the system handles the cameras’ differing focal lengths and perspectives, merging their data into a single coherent picture. We return to this aspect later.
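
The patent summary does not say exactly how the camera feeds are merged, but the basic geometry can be sketched. The Python below is a minimal illustration, assuming an idealized pinhole model and a known depth for each pixel; the `Camera` class, its fields, and all numeric values are hypothetical. Each camera, whatever its focal length or mounting angle, maps its pixels into one shared vehicle frame, so the same object seen by two cameras resolves to the same 3D point.

```python
import math
from dataclasses import dataclass


@dataclass
class Camera:
    """Hypothetical pinhole camera. Vehicle frame: x forward, y left, z up (metres)."""
    fx: float                          # focal length in pixels, horizontal
    fy: float                          # focal length in pixels, vertical
    cx: float                          # principal point, horizontal
    cy: float                          # principal point, vertical
    yaw: float                         # mounting yaw in radians (0 = forward, + = left)
    mount: tuple[float, float, float]  # mounting position in the vehicle frame

    def project(self, p):
        """Vehicle-frame point -> (u, v, depth along the optical axis)."""
        dx, dy, dz = (p[i] - self.mount[i] for i in range(3))
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        fwd = c * dx + s * dy     # distance along the camera's viewing direction
        left = -s * dx + c * dy   # offset to the camera's left
        u = self.cx - self.fx * left / fwd  # image u grows to the right
        v = self.cy - self.fy * dz / fwd    # image v grows downward
        return u, v, fwd

    def unproject(self, u, v, depth):
        """Pixel plus depth -> point in the shared vehicle frame."""
        left = -(u - self.cx) * depth / self.fx
        up = -(v - self.cy) * depth / self.fy
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        # Rotate back by the mounting yaw, then add the mounting offset
        return (c * depth - s * left + self.mount[0],
                s * depth + c * left + self.mount[1],
                up + self.mount[2])


# A narrow forward camera and a wide camera angled to the left (made-up values)
narrow = Camera(2000, 2000, 960, 640, 0.0, (2.0, 0.0, 1.5))
wide = Camera(500, 500, 960, 640, 0.3, (2.0, 0.5, 1.5))
point = (30.0, 4.0, 1.0)
for cam in (narrow, wide):
    u, v, depth = cam.project(point)
    print(cam.unproject(u, v, depth))  # both recover approximately (30.0, 4.0, 1.0)
```

The two cameras place the point at different pixels, yet both resolve it to the same location in the shared frame, which is what makes merging their views possible.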

Branching Structure

The system is split into two branches: one dedicated to Vulnerable Road Users (VRUs) and the other covering all other objects. The division is straightforward. VRUs include pedestrians, cyclists, animals, and other road users at particular risk of harm, while the non-VRU branch handles objects such as cars, emergency vehicles, and debris.

This split lets FSD identify, analyze, and prioritize specific objects, with VRUs given precedence within the Virtual Camera system.
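
As a rough illustration of the split, the sketch below routes detections into two lists and orders them so VRUs are considered first. The class names come from the examples above, but the label strings, the `Detection` type, and the nearest-first tie-break are assumptions; this is a simplified stand-in, not how the patent implements its branches.

```python
from dataclasses import dataclass

# Hypothetical label set, drawn from the examples in the text.
# Anything not listed falls into the non-VRU branch ("all other objects").
VRU_CLASSES = {"pedestrian", "cyclist", "animal"}


@dataclass
class Detection:
    label: str
    distance_m: float


def split_branches(detections):
    """Route each detection to the VRU branch or the non-VRU branch."""
    vru, other = [], []
    for d in detections:
        (vru if d.label in VRU_CLASSES else other).append(d)
    return vru, other


def prioritize(detections):
    """VRUs first; within each group, nearest objects first."""
    return sorted(detections, key=lambda d: (d.label not in VRU_CLASSES, d.distance_m))


scene = [Detection("car", 10.0), Detection("pedestrian", 25.0),
         Detection("debris", 5.0), Detection("cyclist", 12.0)]
vru, other = split_branches(scene)
print([d.label for d in prioritize(scene)])
# ['cyclist', 'pedestrian', 'debris', 'car']
```

Note that the debris 5 m away still ranks behind the pedestrian 25 m away, which is the kind of precedence the text describes.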

Virtual Camera Framework

Tesla processes the raw images captured by the cameras, routes them through the VRU and non-VRU branches, and extracts the information needed to detect and classify objects.

The system projects these objects into a 3D space and creates “virtual cameras” at different heights. These virtual cameras act as viewpoints from which the system analyzes the environment. The VRU branch’s virtual camera sits at roughly human height to better capture how VRUs behave, while the non-VRU branch’s camera is placed higher to give a broader view of traffic.

Together, these two inputs give FSD both a pedestrian-level view and a broader, road-level view to work from.
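
To see why camera height matters, the sketch below places a hypothetical virtual pinhole camera at a chosen height and checks whether it can see a distant object over a nearer one. The heights (1.5 m and 6 m), object sizes, and the `VirtualCamera` class are illustrative assumptions; the article does not give the patent’s actual values.

```python
from dataclasses import dataclass


@dataclass
class VirtualCamera:
    """Hypothetical forward-looking virtual camera. Frame: x forward, y left, z up (m)."""
    height_m: float
    focal_px: float = 1000.0
    cx: float = 640.0
    cy: float = 360.0

    def project(self, x, y, z):
        """Pinhole projection to (u, v) pixels; None if the point is behind the camera."""
        if x <= 0:
            return None
        u = self.cx - self.focal_px * y / x
        v = self.cy + self.focal_px * (self.height_m - z) / x
        return u, v

    def sees_over(self, obstacle_x, obstacle_top_z, target_x, target_z):
        """True if the sight line to a target clears a nearer obstacle on the same bearing."""
        # Height of the straight sight line (camera -> target) at the obstacle's distance
        sight_z = self.height_m + (target_z - self.height_m) * obstacle_x / target_x
        return sight_z > obstacle_top_z


vru_view = VirtualCamera(height_m=1.5)   # roughly human eye level
road_view = VirtualCamera(height_m=6.0)  # raised view over traffic
# Car roof 1.5 m high, 10 m ahead; a point 1.0 m high on a vehicle 30 m ahead
print(vru_view.sees_over(10.0, 1.5, 30.0, 1.0))   # False: hidden behind the near car
print(road_view.sees_over(10.0, 1.5, 30.0, 1.0))  # True: visible over the near car
```

The low viewpoint is better suited to reading a pedestrian’s posture up close, while the raised one can look past nearby vehicles, which matches the division of labor the text describes.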

3D Mapping Integration

All of this data is combined to maintain an accurate 3D map of the surroundings. The synchronized virtual cameras, together with the vehicle’s own sensors, feed movement data such as speed and acceleration into the map.
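
One ingredient of keeping such a map consistent is accounting for the car’s own motion. The sketch below assumes a flat 2D world, simple Euler integration, and a hypothetical `EgoPose` class. It advances the vehicle’s pose from speed, acceleration, and yaw rate, then converts a detection from the car’s frame into fixed world coordinates, so a stationary object stays put in the map even as the car drives toward it.

```python
import math
from dataclasses import dataclass


@dataclass
class EgoPose:
    """Hypothetical 2D vehicle pose in a fixed world frame (metres, radians, m/s)."""
    x: float = 0.0
    y: float = 0.0
    heading: float = 0.0  # 0 = world +x, positive = counter-clockwise
    speed: float = 0.0

    def step(self, accel, yaw_rate, dt):
        """Euler update of position, heading and speed over dt seconds."""
        self.x += self.speed * math.cos(self.heading) * dt
        self.y += self.speed * math.sin(self.heading) * dt
        self.heading += yaw_rate * dt
        self.speed += accel * dt

    def to_world(self, fwd, left):
        """Convert a vehicle-frame detection (forward, left) to world coordinates."""
        c, s = math.cos(self.heading), math.sin(self.heading)
        return self.x + c * fwd - s * left, self.y + s * fwd + c * left


ego = EgoPose(speed=10.0)
first = ego.to_world(30.0, 0.0)   # parked car seen 30 m ahead
for _ in range(10):               # drive straight for 1 s at 10 m/s
    ego.step(accel=0.0, yaw_rate=0.0, dt=0.1)
second = ego.to_world(20.0, 0.0)  # same car, now 20 m ahead
print(first, second)              # both approximately (30.0, 0.0)
```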

The easiest way to picture this system is the FSD visualization on the vehicle’s display, which tracks many moving objects such as cars and pedestrians. Each object carries a set of properties, such as its distance, direction of travel, speed, and other parameters, that the system uses for navigation.

A feature called Temporal Indexing lets the system analyze images over time, so it can predict the paths of objects and build better situational awareness. Video Modules serve as the analytical engine, tracking image sequences to estimate each object’s velocity and trajectory.
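
The article does not describe how the Video Modules work internally, but the underlying idea of estimating motion from a time-indexed sequence can be illustrated with a plain least-squares fit. In the sketch below, `TrackHistory` is a hypothetical name, the buffer length is arbitrary, and the constant-velocity prediction is a deliberate simplification rather than Tesla’s method.

```python
from collections import deque


class TrackHistory:
    """Hypothetical time-indexed buffer of one object's (t, x, y) positions."""

    def __init__(self, max_len=10):
        self.samples = deque(maxlen=max_len)  # oldest samples drop off automatically

    def add(self, t, x, y):
        self.samples.append((t, x, y))

    def velocity(self):
        """Least-squares slope of x(t) and y(t) over the buffer, in m/s."""
        n = len(self.samples)
        if n < 2:
            return 0.0, 0.0
        t_mean = sum(s[0] for s in self.samples) / n
        x_mean = sum(s[1] for s in self.samples) / n
        y_mean = sum(s[2] for s in self.samples) / n
        var_t = sum((s[0] - t_mean) ** 2 for s in self.samples)
        if var_t == 0:
            return 0.0, 0.0
        vx = sum((s[0] - t_mean) * (s[1] - x_mean) for s in self.samples) / var_t
        vy = sum((s[0] - t_mean) * (s[2] - y_mean) for s in self.samples) / var_t
        return vx, vy

    def predict(self, horizon_s):
        """Constant-velocity extrapolation from the latest sample (needs >= 1 sample)."""
        _, x, y = self.samples[-1]
        vx, vy = self.velocity()
        return x + vx * horizon_s, y + vy * horizon_s


# A pedestrian 5 m ahead, crossing to the left at 1.5 m/s, sampled every 0.1 s
track = TrackHistory()
for i in range(5):
    t = i * 0.1
    track.add(t, 5.0, 1.5 * t)
print(track.velocity())    # approximately (0.0, 1.5)
print(track.predict(2.0))  # approximately (5.0, 3.6)
```

Fitting over several frames rather than differencing just the last two makes the estimate less sensitive to noise in any single detection.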

End-to-End Training

According to the patent, the entire system is trained end to end, from start to finish, in keeping with Tesla’s current end-to-end AI approach. Rather than tuning each component in isolation, this training lets the components learn how to work together, which improves overall performance.
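
A toy example can show what training end to end means in practice. Below, a hypothetical two-stage pipeline (a stand-in “perception” weight feeding a “planning” weight) is trained with gradient descent using only the error at the final output. The chain rule carries that error back through both stages, so neither stage is supervised on its own. The stage names, learning rate, and data are assumptions; a real system would use deep networks and automatic differentiation rather than hand-written gradients.

```python
def train_end_to_end(data, lr=0.01, epochs=500):
    """Jointly fit two chained stages using only the final-output error."""
    w1, w2 = 0.5, 0.5  # hypothetical stage weights: "perception" then "planning"
    for _ in range(epochs):
        g1 = g2 = 0.0
        for x, target in data:
            h = w1 * x          # stage 1 output (no label of its own)
            y = w2 * h          # stage 2 output, the only thing compared to a label
            err = y - target
            # Chain rule for loss 0.5 * err**2
            g2 += err * h        # dL/dw2
            g1 += err * w2 * x   # dL/dw1, flowing back through stage 2
        w1 -= lr * g1 / len(data)
        w2 -= lr * g2 / len(data)
    return w1, w2


data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]  # target behaviour: output = 3 * input
w1, w2 = train_end_to_end(data)
print(w1 * w2)  # approximately 3.0
```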

In Conclusion

In essence, Tesla treats FSD as the vehicle’s brain, with the cameras serving as its eyes. The system has a form of memory that helps it categorize and analyze visual data, so it can predict how objects will move and plan the best path. FSD works much like human cognition, but it can track far more objects and their properties, and do so precisely and efficiently.

The result is a vision-based camera system that builds a dynamic 3D map of the road and updates it continuously to inform FSD’s decisions.